Single-Task vs. Multi-Task Prompting: What The Research Shows

Balancing Efficiency and Accuracy in Prompt Engineering

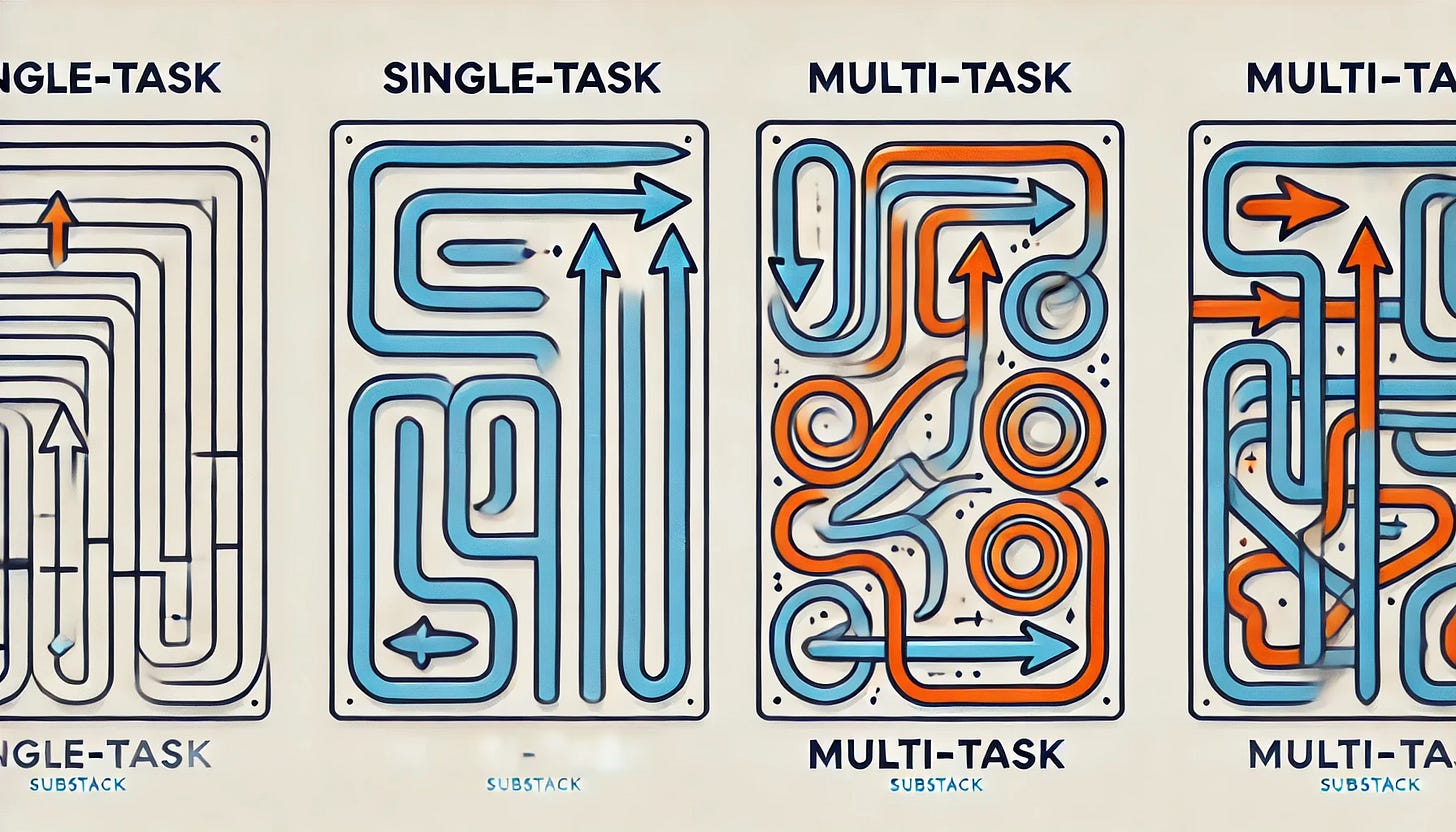

When designing prompts for Large Language Models (LLMs), a critical consideration is how many tasks to include in a single prompt. This article summarizes recent research findings on single-task versus multi-task prompting.

Key Research Findings

Single-Task Prompts Drive Higher Accuracy

Research from Sun Yat-sen University and Carnegie Mellon University on text summarization supports what many have suspected: prompts that focus on one task at a time tend to produce higher-quality results. In that study, prompt chaining, which runs drafting, critiquing, and refining as separate prompts, produced better summaries than a single stepwise prompt that asks for all three phases at once.
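The draft, critique, and refine sequence can be sketched in a few lines of Python. This is a minimal illustration, not the setup used in the study: `call_llm` is a hypothetical function you supply (any callable that takes a prompt string and returns the model's reply), and the prompt wording is an assumption.

```python
from typing import Callable

# Hypothetical interface: takes a prompt string, returns the model's text reply.
LLM = Callable[[str], str]


def chain_summary(document: str, call_llm: LLM) -> str:
    """Run drafting, critiquing, and refining as three single-task prompts."""
    # Step 1: draft only.
    draft = call_llm(f"Summarize the following text.\n\nText:\n{document}")

    # Step 2: critique only, given the source text and the draft.
    critique = call_llm(
        "List the errors and omissions in this summary.\n\n"
        f"Text:\n{document}\n\nSummary:\n{draft}"
    )

    # Step 3: refine only, given the draft and the critique.
    refined = call_llm(
        "Rewrite the summary so that it addresses the critique.\n\n"
        f"Text:\n{document}\n\nSummary:\n{draft}\n\nCritique:\n{critique}"
    )
    return refined
```

Each call carries one instruction, and the output of one step becomes input to the next. The cost is three model calls instead of one.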

Powerful Models Can Handle Multitasking

Not all models are created equal. A study from Seoul National University demonstrates that advanced models like GPT-4 and LLaMA-2-Chat-70B can effectively handle multi-task prompts, sometimes outperforming single-task approaches when tasks share related contexts.

Architecture Determines Performance

The model you're using matters. Research published in the journal Electronics (MDPI) found that performance varies considerably by model architecture. LLaMA-3.1 8B sometimes performs better with multi-task prompts, while other models degrade sharply when given multiple instructions.

Cognitive Overload Is Real

It is easy to overwhelm a model with instructions. Sahin Samia's article on LLM agent design patterns argues that multi-task prompts risk cognitive overload, causing models to neglect or misinterpret parts of your instructions. The result is less accurate, less reliable output.

Efficiency Has Its Place

Google Cloud's prompting documentation highlights a key advantage: multi-task prompts can significantly reduce inference time and token usage when tasks share context. That efficiency is only worth having if quality isn't compromised.
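A rough back-of-the-envelope calculation shows where the token savings come from. The sketch below counts input tokens only and ignores output tokens and any prompt caching your provider may offer; the function names and the token counts are hypothetical, chosen just for illustration.

```python
from typing import Sequence


def input_tokens_separate(context_tokens: int, instruction_tokens: Sequence[int]) -> int:
    """Input tokens when each task gets its own prompt and the context is resent each time."""
    return sum(context_tokens + n for n in instruction_tokens)


def input_tokens_combined(context_tokens: int, instruction_tokens: Sequence[int]) -> int:
    """Input tokens when all tasks share one prompt and the context is sent once."""
    return context_tokens + sum(instruction_tokens)


# Hypothetical sizes: a 2,000-token document and three short instructions.
context = 2000
instructions = [30, 40, 50]

separate = input_tokens_separate(context, instructions)  # 3 * 2000 + 120 = 6120
combined = input_tokens_combined(context, instructions)  # 2000 + 120 = 2120
print(separate - combined)  # 4000, i.e. (number of tasks - 1) * context
```

The saving grows with the size of the shared context and the number of tasks, which is why the benefit is largest when several tasks operate on the same long document.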

What This Means For You

Prioritize single-task prompts when precision, clarity, and detailed reasoning are non-negotiable, especially with smaller models.

Consider multi-task prompts only when efficiency is crucial AND you're using a powerful model AND your tasks are closely related.

Structure multi-task prompts with clear delineation to minimize confusion and cognitive bottlenecks.
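One way to get clear delineation is to wrap the shared context and each task in its own labeled block. The sketch below is one possible layout, not a prescribed format: the function name, the XML-style tag names, and the closing instruction are all illustrative assumptions.

```python
from typing import Sequence


def build_multitask_prompt(context: str, tasks: Sequence[str]) -> str:
    """Assemble one prompt with the shared context and each task in its own tagged block."""
    lines = ["<context>", context.strip(), "</context>", ""]
    for i, task in enumerate(tasks, start=1):
        # Number each task so answers can be matched back to instructions.
        lines += [f'<task id="{i}">', task.strip(), "</task>", ""]
    lines.append("Answer each task separately, labeling each answer with its task id.")
    return "\n".join(lines)


prompt = build_multitask_prompt(
    "Quarterly report text goes here.",
    ["Summarize the report in two sentences.", "List the three largest expenses."],
)
print(prompt)
```

Numbering the tasks and asking for labeled answers also makes it easier to spot when the model has skipped one of them.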

The bottom line? The optimal number of tasks per prompt isn't universal: it depends on your specific model's capabilities, task complexity, and whether you're prioritizing accuracy or efficiency.
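The guidance in this section can be condensed into a small decision rule. This is only a sketch of the heuristic as stated here: the function name and the three boolean inputs are hypothetical, and judging whether a model is "strong" or tasks are "closely related" is left to you.

```python
def choose_prompt_strategy(
    strong_model: bool, tasks_related: bool, efficiency_critical: bool
) -> str:
    """Return "multi-task" only when all three conditions hold; default to "single-task"."""
    if strong_model and tasks_related and efficiency_critical:
        return "multi-task"
    return "single-task"


print(choose_prompt_strategy(strong_model=True, tasks_related=True, efficiency_critical=True))
print(choose_prompt_strategy(strong_model=False, tasks_related=True, efficiency_critical=True))
```

The first call prints `multi-task` and the second prints `single-task`. Treat the result as a starting point and check it against your own model and tasks.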

Links and Sources

1. Prompt Chaining Improves Summary Quality

• Source: “Prompt Chaining or Stepwise Prompt? Refinement in Text Summarization” by Sun Yat-sen University & Carnegie Mellon University

2. Multi-Task Inference Benchmark (Multi-tasking with GPT-4 & LLaMA-2-Chat-70B)

• Source: Seoul National University

3. Comparative Analysis of Prompt Strategies (Impact of model architecture on multitasking)

• Source: Electronics Journal (MDPI)

4. Prompt Engineering Overview by Anthropic (Decomposing complex tasks)

• Source: Anthropic official documentation

5. LLM-Based AI Agent Design Patterns (Cognitive overload from multi-task prompts)

• Source: Sahin Samia (Medium)

6. Google’s Prompting Best Practices (Efficiency of multitask prompts)

• Source: Google Cloud Documentation